Top 5 Open-Source AI Image Generation Projects on GitHub

This article introduces 5 outstanding open-source AI image generation projects on GitHub, highlighting their key features, use cases, and installation methods to help users choose the right tool.

AI image generation is changing how creative and design work gets done. It gives artists and designers new tools and is finding applications well beyond those fields. Open-source projects drive much of this progress by making models and code available for anyone to study, run, and extend. In this article, we'll introduce five outstanding AI image generation projects and tools from GitHub.

Stable Diffusion

Stable Diffusion is a latent text-to-image diffusion model developed by the CompVis group in collaboration with Stability AI and Runway. It produces high-quality, creative images from text descriptions. Project URL: https://github.com/CompVis/stable-diffusion

Key Features and Capabilities

- High-quality image generation: Capable of producing detailed images at 512x512 resolution

- Text-to-image: Generates images based on textual descriptions

- Image-to-image: Modifies or enhances existing images

- Supports multiple artistic styles and themes

Use Cases

- Artistic creation

- Advertising design

- Game development

- Concept art

Installation and Usage

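You can set it up with the conda environment defined in the repository, or run the model through a pip-installed library such as Hugging Face Diffusers or a community Docker image. Model weights are downloaded separately. Basic usage involves providing a text prompt, from which the model generates the corresponding image.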
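As a rough illustration of the prompt-driven workflow, the sketch below assembles a command line for the `scripts/txt2img.py` script that ships in the CompVis repository. The helper names (`build_txt2img_command`, `run_txt2img`), the default values, and the `stable-diffusion` checkout directory are assumptions made for this example. It also assumes the repository's environment is active and the weights are already in place as its README describes.

```python
import shlex
import subprocess
from typing import List


def build_txt2img_command(
    prompt: str,
    outdir: str = "outputs/txt2img-samples",
    height: int = 512,
    width: int = 512,
    seed: int = 42,
    n_samples: int = 1,
) -> List[str]:
    """Assemble the argument list for the repository's scripts/txt2img.py."""
    return [
        "python", "scripts/txt2img.py",
        "--prompt", prompt,
        "--plms",                      # use the PLMS sampler
        "--H", str(height),
        "--W", str(width),
        "--seed", str(seed),           # fixed seed for reproducible output
        "--n_samples", str(n_samples),
        "--outdir", outdir,
    ]


def run_txt2img(prompt: str, repo_dir: str = "stable-diffusion") -> None:
    """Run the script from inside a local checkout (repo_dir is hypothetical)."""
    subprocess.run(build_txt2img_command(prompt), cwd=repo_dir, check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so this works without a GPU.
    print(shlex.join(build_txt2img_command("a photograph of an astronaut riding a horse")))
```

Building the argument list separately from running it makes it easy to inspect or log the exact command before committing GPU time to it.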

Project Highlights

The open-source nature of the project allows the community to continuously improve and customize the model, leading to the creation of various innovative applications and variants.

DALL-E Mini (Now rebranded as Craiyon)

DALL-E Mini is an open-source alternative to OpenAI's DALL-E, capable of generating images based on textual descriptions. Project URL: https://github.com/borisdayma/dalle-mini

Key Features and Capabilities

- Text-to-image generation

- Batch image generation

- Relatively small model size, easy to deploy

Use Cases

- Quick concept visualization

- Creative inspiration

- Education and research

Installation and Usage

It can be run using a Google Colab notebook or a local Python environment. It is easy to use: simply input a text description, and the model will generate an image.

Project Highlights

The model is relatively small and fast, which makes it well suited to rapid prototyping and creative exploration.

StyleGAN3

StyleGAN3 is the alias-free successor to StyleGAN2, developed by NVIDIA's research team. It focuses on high-quality image synthesis with a style-based generator. Project URL: https://github.com/NVlabs/stylegan3

Key Features and Capabilities

- Extremely high-quality image generation

- Improved image consistency and detail

- Better suited to video and animation: fine details move with the depicted object instead of sticking to pixel coordinates

- Better control and editability

Use Cases

- High-end visual effects production

- Virtual reality (VR) and augmented reality (AR) content creation

- Fashion and product design

Installation and Usage

It requires an NVIDIA GPU. Clone the repository with Git and follow its documentation for setup and training.
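Once the repository is cloned and its environment is set up, images are generated from a trained network pickle with the `gen_images.py` script. The sketch below only builds that command. The helper name `build_gen_images_command` and the `path/to/network.pkl` placeholder are hypothetical, so substitute a real pickle path or URL from the project documentation.

```python
import shlex
from typing import List, Sequence


def build_gen_images_command(
    network: str,
    seeds: Sequence[int],
    outdir: str = "out",
    trunc: float = 1.0,
) -> List[str]:
    """Assemble the argument list for StyleGAN3's gen_images.py."""
    # The script accepts seeds as a comma-separated list, e.g. 0,1,2.
    seed_arg = ",".join(str(s) for s in seeds)
    return [
        "python", "gen_images.py",
        f"--outdir={outdir}",
        f"--trunc={trunc}",        # truncation psi
        f"--seeds={seed_arg}",     # one image is generated per seed
        f"--network={network}",    # local path or URL of a network pickle
    ]


if __name__ == "__main__":
    # Placeholder network path: replace with a real pickle before running.
    print(shlex.join(build_gen_images_command("path/to/network.pkl", [0, 1, 2])))
```

Run the printed command from the root of the cloned repository on a machine with a supported NVIDIA GPU.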

Project Highlights

StyleGAN3 sets a new standard in image quality and diversity, making it ideal for applications requiring high levels of realism.

Hugging Face Diffusers

Hugging Face Diffusers is a toolkit for state-of-the-art diffusion models, supporting a variety of pre-trained models. Project URL: https://github.com/huggingface/diffusers

Key Features and Capabilities

- Supports multiple diffusion models, including Stable Diffusion

- Easy-to-use API

- Extensive library of pre-trained models

- Supports model fine-tuning and customization

Use Cases

- Research and experimentation

- Rapid prototyping

- Integration into existing AI pipelines

Installation and Usage

You can install it via pip. The library provides a simple Python API, making it easy to start using different diffusion models.
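A minimal sketch of that API follows, assuming `diffusers`, `transformers`, and `torch` are installed. The checkpoint ID, the output file name, and the helper names (`choose_device_and_dtype`, `generate_image`) are choices made for this example, and any compatible checkpoint from the Hugging Face Hub could be used instead.

```python
from typing import Tuple


def choose_device_and_dtype(cuda_available: bool) -> Tuple[str, str]:
    """Pick half precision on GPU and full precision on CPU."""
    return ("cuda", "float16") if cuda_available else ("cpu", "float32")


def generate_image(
    prompt: str,
    model_id: str = "stable-diffusion-v1-5/stable-diffusion-v1-5",  # example checkpoint
    output_path: str = "output.png",
) -> str:
    """Load a pipeline, generate one image from the prompt, and save it."""
    # Heavy imports stay inside the function so the module loads without them.
    import torch
    from diffusers import DiffusionPipeline

    device, dtype_name = choose_device_and_dtype(torch.cuda.is_available())
    pipe = DiffusionPipeline.from_pretrained(
        model_id, torch_dtype=getattr(torch, dtype_name)
    )
    pipe = pipe.to(device)

    image = pipe(prompt).images[0]  # the pipeline returns a list of PIL images
    image.save(output_path)
    return output_path


if __name__ == "__main__":
    # The first run downloads the checkpoint, which is several gigabytes.
    print(generate_image("An image of a squirrel in Picasso style"))
```

Swapping `model_id` is usually all it takes to try a different diffusion model, which is much of the library's appeal for experimentation.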

Project Highlights

The strength of Hugging Face Diffusers lies in its broad model support and active developer community, making it a key tool in AI image generation.

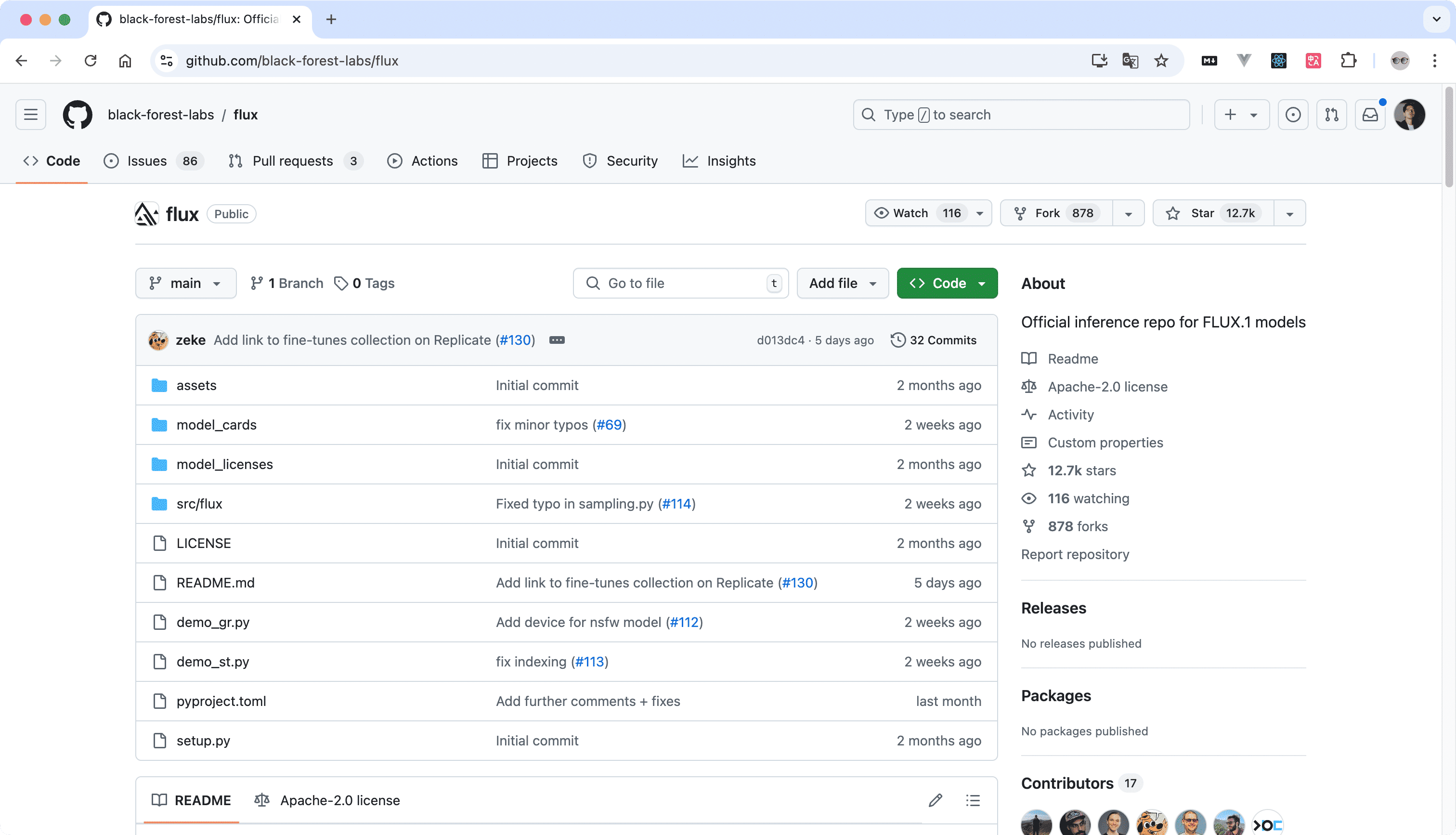

Flux

Flux (FLUX.1), developed by Black Forest Labs, is an advanced AI image generation model based on Latent Rectified Flow Transformers, offering high-quality, fast image generation. Project URL: https://github.com/black-forest-labs/flux

Key Features and Capabilities

- High-quality image generation: Produces visually impressive, detail-rich images

- Fast generation: Significantly faster than many other models

- Text-to-image: Generates images based on textual descriptions

- Image-to-image: Supports image editing and generation from input images

- Excellent prompt following capability: Accurately understands and executes complex text prompts

Use Cases

- Creative design and art production

- Advertising and marketing material generation

- Concept art and prototyping

- Game and entertainment content creation

- Research and experimentation

Installation and Usage

You can clone the project via GitHub and follow these steps for installation:

git clone https://github.com/black-forest-labs/flux

cd flux

pip install -e '.[all]'

The model can be used via a Python API or command-line interface for image generation.
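For a quick first test from Python, one option is the `FluxPipeline` integration in Hugging Face Diffusers, which is a different route from the repository's own package and CLI. The sketch below assumes `diffusers` and `torch` are installed and uses the FLUX.1 [schnell] checkpoint with the few-step settings commonly shown for it. The helper names (`schnell_call_settings`, `generate_with_flux`) and the output file name are made up for this example.

```python
from typing import Any, Dict


def schnell_call_settings() -> Dict[str, Any]:
    """Sampling settings commonly used with the few-step FLUX.1 [schnell] model."""
    return {
        "guidance_scale": 0.0,        # schnell is run without guidance
        "num_inference_steps": 4,     # few-step generation
        "max_sequence_length": 256,
    }


def generate_with_flux(
    prompt: str,
    seed: int = 0,
    output_path: str = "flux-schnell.png",
) -> str:
    """Generate one image through the Diffusers FluxPipeline and save it."""
    # Heavy imports stay inside the function so the module loads without them.
    import torch
    from diffusers import FluxPipeline

    pipe = FluxPipeline.from_pretrained(
        "black-forest-labs/FLUX.1-schnell", torch_dtype=torch.bfloat16
    )
    pipe.enable_model_cpu_offload()  # lowers GPU memory use at some speed cost

    generator = torch.Generator("cpu").manual_seed(seed)
    image = pipe(prompt, generator=generator, **schnell_call_settings()).images[0]
    image.save(output_path)
    return output_path


if __name__ == "__main__":
    print(generate_with_flux("A cat holding a sign that says hello world"))
```

The low step count is what makes this variant fast. Other FLUX.1 checkpoints generally need more steps and a non-zero guidance scale, so check the model card before reusing these settings.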

Project Highlights

Flux strikes an excellent balance between generation speed and image quality, while maintaining strong capabilities in understanding and executing complex prompts.

Comparison of the 5 Projects/Tools

| Project/Tool | Pros | Cons |
| --- | --- | --- |
| Stable Diffusion | Versatile, high-quality generation | High resource requirements |
| DALL-E Mini | Lightweight, easy to use | Lower image quality |
| StyleGAN3 | Extremely high-quality images, video support | Steep learning curve, requires powerful hardware |
| Hugging Face Diffusers | Diverse models, flexible usage | May require technical background |
| Flux | Fast generation, high-quality images, strong prompt adherence | As a new project, it may still be evolving |

How to Choose the Right AI Image Generation Project/Tool

- Consider your technical skill level and hardware capabilities

- Define your use case (research, creation, entertainment, etc.)

- Evaluate the balance between image quality and generation speed

Key Considerations for Using These Open-Source Projects

- Adhere to open-source license agreements

- Be mindful of model biases and ethical concerns

- Regularly update to get the latest features and security fixes

How to Contribute to Open-Source AI Image Generation Projects

- Learn the relevant tech stack

- Participate in project discussions and report issues

- Submit code improvements or new features

Conclusion

Contributions from the open-source community play a crucial role in advancing and popularizing AI image generation technology. The projects covered here showcase what the technology can do today and hint at where it is heading. Whether you're a professional creator, developer, or AI enthusiast, these tools open up a world of creativity and innovation. We encourage you to explore them, experience AI image generation firsthand, and perhaps become a contributor to this exciting field.
